What Is Ground Truth?

At the foundation of advanced driver-assistance systems (ADAS) is an environmental model that represents the world around a vehicle, built on data collected from sensors such as radar, cameras, lidar and ultrasonic sensors. As developers improve and refine the environmental models those sensors generate, they need to know how accurately the models represent the real world. The gold-standard reference they measure against is called ground truth.

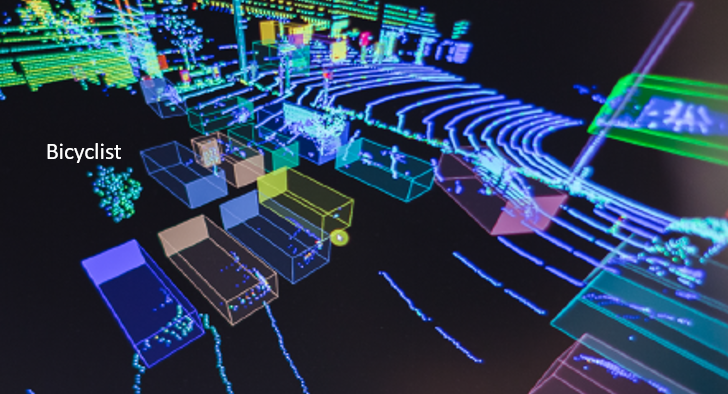

For example, if there is a bicyclist ahead of a vehicle, the developers need to make sure the sensors accurately detect that an object is there, determine the range to the object, measure the size of the object, measure the relative velocity of that object, and classify that object as a bicycle. Radar is great at detecting range and velocity – performing well even in adverse weather – and advanced radar systems are also able to determine elevation. Cameras are good at azimuth detection and object classification but struggle with accurate range detection. To begin a full assessment of the performance of these and other sensors, there first has to be a ground truth that establishes the “right answer” for all of the questions the sensors are trying to address.
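To make the idea of a "right answer" concrete, here is a minimal sketch of scoring one sensor measurement against a ground-truth record for the same object. The `ObjectState` schema, its field names and the sample numbers are illustrative assumptions, not a format used by any particular ADAS toolchain.

```python
from dataclasses import dataclass


@dataclass
class ObjectState:
    """One object as reported by a sensor or by the ground-truth rig (hypothetical schema)."""
    range_m: float           # distance to the object, in metres
    width_m: float           # object size, in metres
    rel_velocity_mps: float  # relative velocity, in metres per second
    label: str               # object class, e.g. "bicycle"


def score_detection(truth: ObjectState, measured: ObjectState) -> dict:
    """Return the per-attribute error of a measurement relative to ground truth."""
    return {
        "range_error_m": measured.range_m - truth.range_m,
        "width_error_m": measured.width_m - truth.width_m,
        "velocity_error_mps": measured.rel_velocity_mps - truth.rel_velocity_mps,
        "label_correct": measured.label == truth.label,
    }


# Made-up values for a bicyclist ahead of the vehicle.
truth = ObjectState(range_m=40.0, width_m=0.5, rel_velocity_mps=-3.0, label="bicycle")
radar = ObjectState(range_m=40.5, width_m=1.0, rel_velocity_mps=-3.5, label="unknown")

report = score_detection(truth, radar)
print(report)
```

In this made-up case the radar is close on range and velocity but has not classified the object, which matches the kind of strength-and-weakness profile described above.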

To record ground truth, developers use test vehicles equipped with an array of extremely sensitive inertial measurement devices, highly accurate GPS equipment, lidar and cameras mounted to their roofs. While these sensors are much too expensive, bulky and impractical for use in everyday vehicles, they provide very high resolution in real time to create a highly accurate representation of the environment around the test vehicle.

Developers attach the sensors they are working on to the same vehicle, so the ground-truth sensors and the development sensors are time-aligned and operate in the same place, in the same environment and with the same potential inputs. Testers drive the vehicles in a wide range of scenarios, both on closed test tracks and on public roads, and then bring the data back to a lab for analysis. Because of the high volume of data collected, testers upload it to the cloud to bring more processing power to bear and produce the ground-truth results in minutes. This ground-truth information can then help developers pull more refined information out of raw sensor data via artificial intelligence and machine learning.
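One simple way to compare two time-aligned recordings is to pair each development-sensor sample with the ground-truth sample closest to it in time. The sketch below assumes both streams carry timestamps from a shared clock and that the ground-truth timestamps are sorted; the function names and the 50 ms tolerance are hypothetical choices for illustration.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Index of the entry in sorted, non-empty `timestamps` that is closest to time t."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Choose whichever neighbour is closer to t.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align(truth_times, sensor_times, max_gap_s=0.05):
    """Pair each sensor sample with its nearest ground-truth sample.

    Returns (sensor_index, truth_index) pairs; samples with no ground-truth
    sample within max_gap_s seconds are dropped.
    """
    if not truth_times:
        return []
    pairs = []
    for s_idx, t in enumerate(sensor_times):
        g_idx = nearest_index(truth_times, t)
        if abs(truth_times[g_idx] - t) <= max_gap_s:
            pairs.append((s_idx, g_idx))
    return pairs


truth_times = [0.00, 0.10, 0.20, 0.30]   # seconds, ground-truth rig
sensor_times = [0.02, 0.11, 0.26, 0.50]  # seconds, sensor under development

pairs = align(truth_times, sensor_times)
print(pairs)
```

The last sensor sample has no ground-truth sample within the tolerance, so it is left out of the comparison rather than matched to stale data.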

Say, for example, that a car is outfitted with both ground-truth measurement equipment and test radar, and the radar detects a partially occluded object that the ground truth later identifies as a bicyclist when the view is no longer blocked. Developers then use that data to tell machine learning software that the not-so-obvious pattern of radar returns coming from the object at that location represents a bicyclist. The next time that the software encounters a similar pattern, there is a better chance that it will be able to detect and classify that type of object, identifying a bicyclist with greater confidence and without the help of ground-truth equipment.
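The labeling step can be sketched as attaching the ground-truth class to radar returns that came from the same location, which produces labeled examples for a machine learning model to train on. This is only an illustration under stated assumptions: the dictionary keys, the `features` vectors and the 2 m matching radius are hypothetical, and a production pipeline would also track objects over time.

```python
import math


def label_radar_returns(radar_returns, truth_objects, max_dist_m=2.0):
    """Attach the class of the nearest ground-truth object to each radar return.

    Returns within max_dist_m metres of a ground-truth object become labeled
    training examples; all other returns are skipped.
    """
    labeled = []
    for r in radar_returns:
        nearest = min(
            truth_objects,
            key=lambda o: math.dist(r["xy"], o["xy"]),
            default=None,
        )
        if nearest is not None and math.dist(r["xy"], nearest["xy"]) <= max_dist_m:
            labeled.append({"features": r["features"], "label": nearest["label"]})
    return labeled


# Ground truth, resolved after the view is no longer blocked (made-up values).
truth_objects = [
    {"xy": (30.0, 1.0), "label": "bicycle"},
    {"xy": (60.0, -3.0), "label": "car"},
]

# Radar returns recorded while the object was still partially occluded.
radar_returns = [
    {"xy": (30.0, 2.0), "features": [0.2, 0.7]},
    {"xy": (10.0, 10.0), "features": [0.9, 0.1]},
]

training_examples = label_radar_returns(radar_returns, truth_objects)
print(training_examples)
```

Only the first return lies close enough to a ground-truth object to be labeled; the second is discarded instead of being given a guessed class.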

This technique helps to democratize safety. By applying software intelligence to radar systems and other less-expensive sensor hardware, OEMs can improve ADAS features and extend them to more vehicles, beyond just premium models.